Objectives

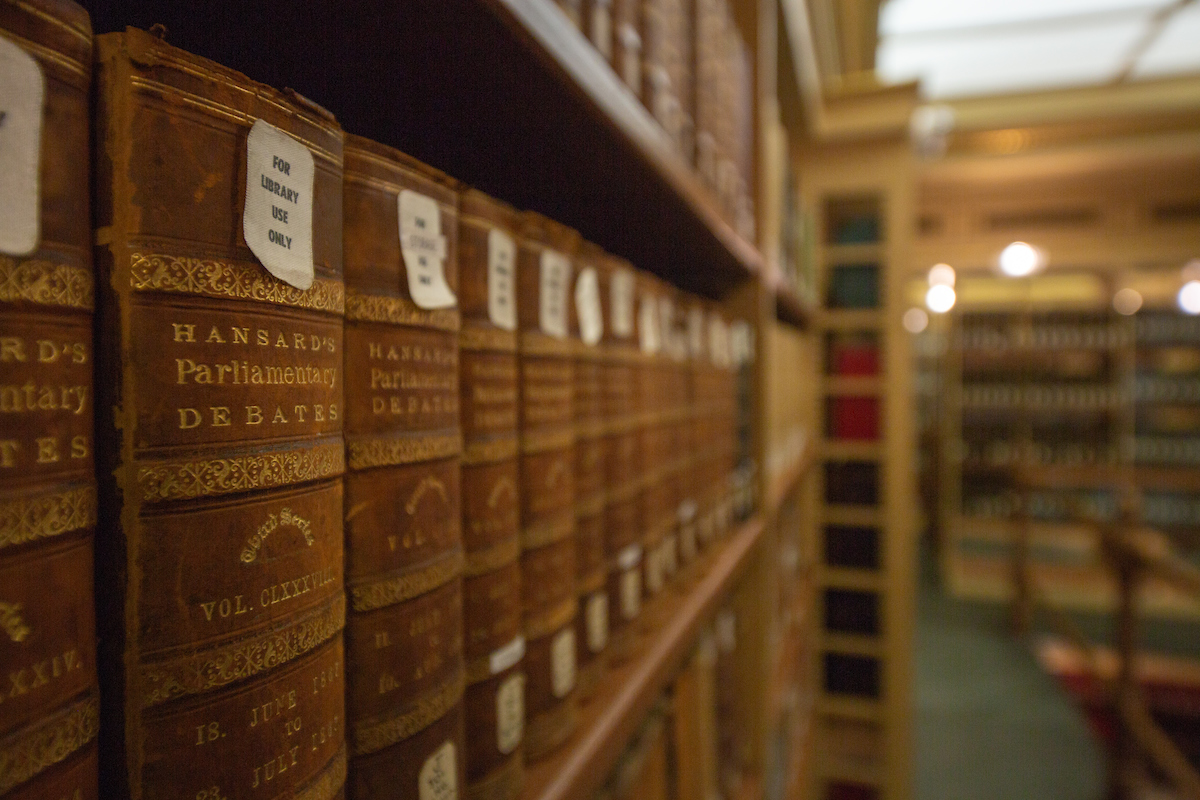

This project aimed to integrate large language models (LLMs) with search engines to recommend reliable references from authoritative sources such as DBLP and arXiv. By combining the capabilities of search engines with generative AI, the project sought to generate answers that were well-grounded in trusted document collections, ensuring accuracy and reliability.

To achieve these objectives, the project involved several key steps. It began with designing methods to evaluate search engine performance using LLMs, followed by enhancing the models’ ability to analyze and adjust search engine parameters. The team then focused on integrating LLMs with search engines and testing these interactions to optimize performance. Additionally, grounding mechanisms were developed to ensure that generated content was supported by trusted sources. The system’s performance was continuously monitored, and the system was iteratively refined to improve its accuracy and reliability over time.
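The grounding step described above can be illustrated with a minimal sketch. It is not the project's actual implementation: the `Document` type, the keyword-overlap `search` function, and the `stub_generate` stand-in for an LLM call are all hypothetical names introduced for illustration. The sketch only shows the general idea of answering from retrieved, trusted documents and declining to answer when nothing supports the query.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Document:
    """A record from a trusted collection (hypothetical schema)."""
    doc_id: str
    source: str  # e.g. "arXiv" or "DBLP"
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and strip basic punctuation from each token."""
    return {tok.strip(".,;:!?()").lower() for tok in text.split()} - {""}


def search(query: str, collection: list[Document], k: int = 2) -> list[Document]:
    """Toy search engine: rank documents by the number of shared query terms."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc.text)), doc) for doc in collection]
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def stub_generate(query: str, evidence: list[Document]) -> str:
    """Stand-in for an LLM call (assumption): it only restates the evidence."""
    return " ".join(doc.text for doc in evidence)


def grounded_answer(
    query: str,
    collection: list[Document],
    generate: Callable[[str, list[Document]], str] = stub_generate,
) -> dict:
    """Answer only when retrieved evidence exists, and cite that evidence."""
    evidence = search(query, collection)
    if not evidence:
        # No supporting document was found, so no answer is generated.
        return {"answer": None, "citations": []}
    return {
        "answer": generate(query, evidence),
        "citations": [doc.doc_id for doc in evidence],
    }


# Made-up example records, not real arXiv or DBLP entries.
collection = [
    Document("arxiv:demo-1", "arXiv",
             "Dense retrieval encodes queries and passages as vectors."),
    Document("dblp:demo-2", "DBLP",
             "Conversational agents answer multi-turn questions."),
]

supported = grounded_answer("dense retrieval", collection)
unsupported = grounded_answer("quantum cooking", collection)
```

In a real system the toy `search` function would be replaced by calls to an actual search engine, and `stub_generate` by a prompt to an LLM that receives the retrieved passages as context.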

Outcomes

By integrating AI tools into the research and learning process, the team gained a more comprehensive understanding of the practical applications of AI in real-world scenarios, particularly how AI can be used to improve research efficiency, generate insights, and automate complex tasks. This hands-on experience with AI technologies allowed them to see the direct impact of AI on their work, making abstract concepts more tangible and easier to grasp.

Additionally, learning about AI and its capabilities made students think critically about the ethical implications, limitations, and potential biases of using such technology. This critical thinking extended to understanding the importance of data quality, the nuances of machine learning models, and the necessity of transparency and accountability in AI applications.

For students working in the field of AI, integrating AI into learning is unavoidable. As AI continues to evolve, understanding its tools, methods, and applications becomes essential for anyone pursuing a career in this area. Engaging with AI not only helps students grasp the core concepts of their studies but also prepares them for the future landscape of the industry, where AI knowledge and skills will be increasingly vital.

By working directly with AI technologies, students gain hands-on experience that deepens their understanding of both theoretical and practical aspects of AI. This experience allows them to experiment with real-world applications, develop innovative solutions, and critically analyze the capabilities and limitations of AI systems. As a result, they become more adept at navigating the complexities of AI and more prepared to contribute meaningfully to advancements in the field.

The project also produced a concrete research output: a paper titled "Sequencing Matters: A Generate-Retrieve-Generate Model for Building Conversational Agents". The paper was presented at the 32nd Text Retrieval Conference (TREC 2023), held in Rockville, MD, USA, on November 14–17, 2023.

Team

Grace Hui Yang

Associate Professor, InfoSense Lab, Department of Computer Science, Georgetown University

Sibo Dong

PhD student, InfoSense Lab, Department of Computer Science

Xubo Lin

PhD student, InfoSense Lab, Department of Computer Science

Zhaoming Wang

MS student, InfoSense Lab, Department of Computer Science

Liangyu Li

MS student, InfoSense Lab, Department of Computer Science

Quinn Patwardhan

Summer Research Intern, InfoSense Lab, Department of Computer Science